4 min read

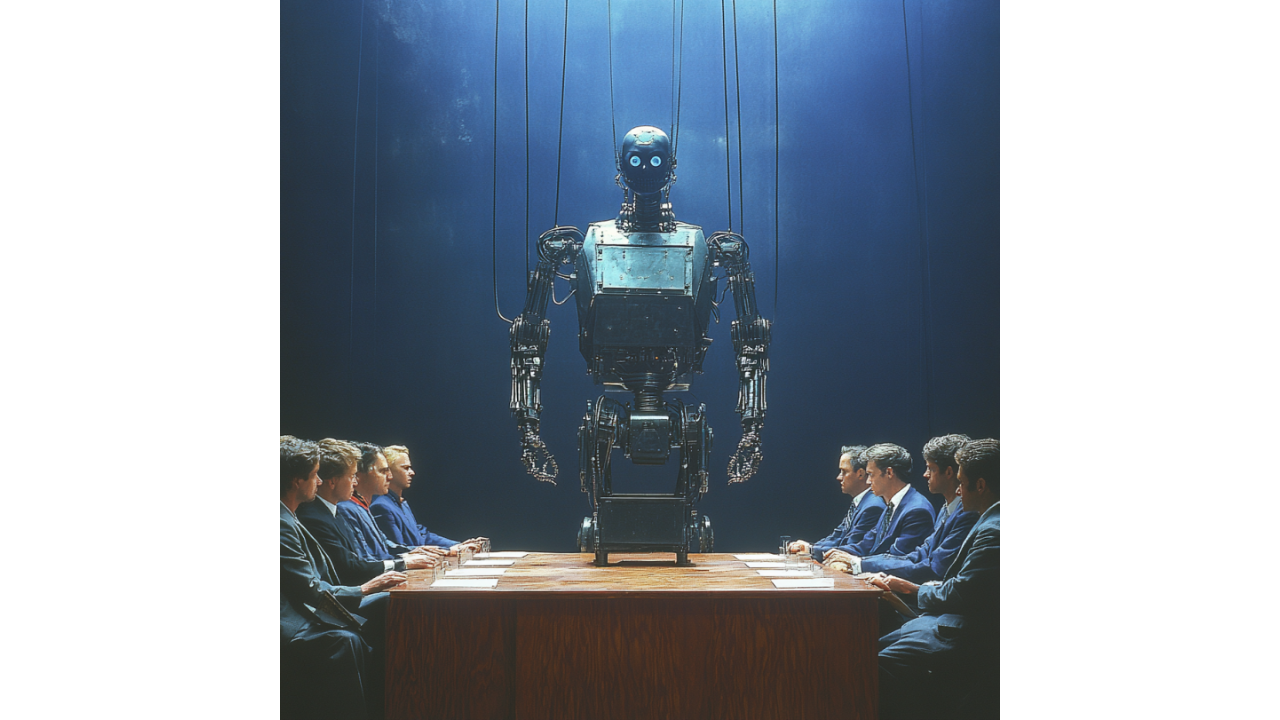

Bengio's LawZero — Hope for Honest AI?

We spend so much time cataloging AI's dystopian possibilities that we rarely celebrate when someone with actual influence chooses the harder path....

Health Secretary Robert F. Kennedy Jr.'s "Make America Healthy Again" report should serve as a wake-up call for anyone who still believes artificial intelligence can be deployed responsibly without rigorous oversight. The document, which purported to represent "gold-standard science" backed by over 500 studies, has been exposed as a masterclass in how AI can undermine the very foundations of evidence-based policymaking—and public trust itself.

Of the 522 footnotes to scientific research in an initial version of the report sent to The Washington Post, at least 37 appear multiple times. Other citations include the wrong author, and several studies cited by the extensive health report do not exist at all. Even more damning, some citations included "oaicite" attached to URLs, a marker associated with OpenAI's chatbot that indicates it was used to generate the references.

This isn't a minor formatting error or a simple oversight. It is a systematic failure that reveals how AI tools, when used without proper verification processes, can fabricate an entire evidence base for government policy. Seven of the cited sources don't appear to exist at all. In one case, epidemiologist Katherine Keyes is listed in the MAHA report as the first author of a study on anxiety in adolescents. When NOTUS reached out to her, she was surprised to hear of the citation. She does study mental health and substance use, she said, but she didn't write the paper listed.

The implications are staggering. We're not talking about a student paper or a blog post—this is a federal government report designed to influence national health policy, presented to the American people as authoritative science. Georges C. Benjamin, executive director of the American Public Health Association, put it bluntly: "This is not an evidence-based report, and for all practical purposes, it should be junked at this point. It cannot be used for any policymaking. It cannot even be used for any serious discussion, because you can't believe what's in it."

The MAHA report incident isn't an isolated case—it's part of a broader pattern of AI tools being deployed irresponsibly across sectors that demand accuracy and accountability. A freelance writer for the Chicago Sun-Times recently used AI to create a list of new books everyone should be excited for this summer. Ten of the 15 books on the list simply don't exist, though many of the authors named were real. Attorneys have faced sanctions for using nonexistent case citations created by ChatGPT in legal briefs.

But when government agencies start using AI to generate policy documents without proper verification, we cross a dangerous threshold. In one survey, four out of five respondents expressed some level of worry about AI's role in election misinformation, and incidents like the MAHA report demonstrate that these concerns are entirely justified.

The speed and scale at which AI can generate convincing-looking but fundamentally false information represent an unprecedented threat to evidence-based governance. Sophisticated generative AI tools can now create cloned human voices and hyper-realistic images, videos and audio in seconds, at minimal cost. When strapped to powerful social media algorithms, this fake and digitally created content can spread far and fast and target highly specific audiences.

The MAHA report scandal exposes a critical vulnerability in our information ecosystem: most people lack the media literacy skills necessary to identify AI-generated content, especially when it comes wrapped in the authority of government institutions. News consumption, particularly through television, appears to be closely linked to heightened concern about AI misinformation, but concern is insufficient without the tools to identify and combat that misinformation.

AI-generated misinformation has been found more likely to mimic the criteria in existing educational guidelines, so the checklist-style guidelines currently used to spot misinformation may no longer be sufficient. Traditional media literacy approaches, such as checking sources, looking for author credentials, and verifying publication dates, become ineffective when AI can fabricate entire citation networks that look legitimate on the surface.

The problem is compounded by algorithmic bias that shapes what information people see and trust. AI models are trained on vast amounts of human-created data, which means they can inherit and amplify biases that are already present in that data. This can result in AI-generated content that reflects gender, racial, or cultural biases, sometimes reinforcing stereotypes or providing incomplete perspectives.

When government agencies use these biased tools to generate policy documents, they're not just spreading misinformation—they're institutionalizing algorithmic bias at the highest levels of decision-making.

Perhaps most alarming is how incidents like the MAHA report contribute to the systematic erosion of trust in institutions. When the White House Press Secretary can dismiss fabricated citations as mere "formatting issues" while claiming the report represents "one of the most transformative health reports that has ever been released by the federal government," we witness the normalization of AI-generated disinformation at the highest levels of government.

White House press secretary Karoline Leavitt said the White House has "complete confidence in Secretary Kennedy and his team at HHS." She added: "I understand there were some formatting issues with the MAHA Report that are being addressed, and the report will be updated, but it does not negate the substance of the report."

This response reveals a fundamental misunderstanding of the problem. These aren't formatting issues—they're epistemological failures. When your evidence base is fabricated, the "substance" of your report becomes meaningless. Yet the administration's response suggests they either don't understand this distinction or don't care about it.

The MAHA report incident also highlights how AI systems can amplify existing institutional biases and agendas. When Kennedy's team used AI to generate citations supporting predetermined conclusions about vaccines, autism, and medications, they weren't conducting research; they were using algorithmic tools to manufacture evidence for existing beliefs.

This represents a particularly insidious form of confirmation bias, where AI tools are deployed not to discover truth but to create the appearance of scientific consensus around predetermined positions. The "MAHA Report: Make Our Children Healthy Again," which was released earlier this month, purports to expose "the stark reality of American children's declining health, backed by compelling data and long-term trends." While the report does attempt to address critical issues and questions related to children's health—including nutrition and the effects of childhood exposure to digital devices—at its core the report is an attempt to backfill Kennedy's more outlandish claims about vaccinations, autism, and medications with shoddy data.

The MAHA report scandal occurs against a backdrop of growing international concern about AI-generated misinformation in democratic processes. The European Union (EU) has already started implementing preventative measures to counter the possible impact of false information and AI-generated deep fakes, and it is employing a multi-faceted approach to tackle the challenge. The EU's plan combines legal regulations, collaboration with tech companies, and public awareness campaigns.

Under the recently implemented Digital Services Act (DSA), large online platforms like Facebook and TikTok must identify and label manipulated audio and imagery, including deep fakes, by August 2025. Meanwhile, the United States appears to be moving in the opposite direction, with government agencies themselves becoming vectors for AI-generated misinformation.

The stakes couldn't be higher. The warnings issued ahead of the 2024 campaigns and elections still apply: generative AI can not only rapidly produce targeted campaign emails, texts or videos, but could also be used to mislead voters, impersonate candidates and undermine elections on a scale and at a speed not yet seen.

When government agencies normalize the use of unverified AI-generated content in official reports, they're not just compromising individual policy decisions—they're undermining the entire framework of evidence-based governance that democracy requires.

Media literacy emphasizes critical skills and mindsets required for navigating the digital space. Media literacy programs also offer techniques to quickly spot fake imagery or resources to track down the original source of questionable information. But these programs assume a baseline level of institutional credibility that incidents like the MAHA report actively undermine.

The solution isn't to abandon AI tools entirely, but to implement rigorous oversight mechanisms before they're deployed in contexts where accuracy matters. Every government use of AI should require:

Mandatory Human Verification: Every AI-generated citation, fact, or claim must be independently verified by qualified human experts before publication.

Transparency Requirements: All government documents that use AI assistance must clearly disclose this fact and detail the verification processes employed.

Accountability Mechanisms: Officials who approve the publication of AI-generated content without proper verification should face professional consequences.

Public AI Literacy Programs: Massive investment in media literacy education that specifically addresses AI-generated content and its detection.
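Part of the verification step can be automated as a first pass before human experts take over. The sketch below is one possible approach, not a description of any agency's actual process: it flags citations that repeat and citations containing the "oaicite" marker, the two machine-detectable signals reported in the MAHA footnotes. The function names and the sample citations are hypothetical placeholders, and a clean result does not mean a source exists. That still requires a person to look it up.

```python
import re
from collections import Counter

# Marker reported in some of the MAHA report's URLs.
OAICITE_MARKER = "oaicite"


def normalize(citation: str) -> str:
    """Lowercase and collapse punctuation/whitespace so near-identical entries compare equal."""
    return re.sub(r"[^a-z0-9]+", " ", citation.lower()).strip()


def flag_citations(citations: list[str]) -> dict[str, list[int]]:
    """Return the indices of citations that need human review, grouped by reason."""
    counts = Counter(normalize(c) for c in citations)
    flags: dict[str, list[int]] = {"duplicate": [], "ai_marker": []}
    for index, citation in enumerate(citations):
        if counts[normalize(citation)] > 1:
            flags["duplicate"].append(index)
        if OAICITE_MARKER in citation.lower():
            flags["ai_marker"].append(index)
    return flags


if __name__ == "__main__":
    # Hypothetical placeholder entries, not real references.
    sample = [
        "Author A (2020). Placeholder study one. https://example.org/one",
        "Author B (2021). Placeholder study two. https://example.org/two#oaicite",
        "author a (2020).  Placeholder Study One. https://example.org/one",
    ]
    print(flag_citations(sample))
```

On the placeholder list, the first and third entries are flagged as duplicates and the second as carrying the AI marker. A check like this only narrows where reviewers should look first; it cannot detect a fabricated study that is cited once with a clean URL.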

For marketing and business leaders, the MAHA report disaster offers several critical lessons:

Trust is Fragile: When institutions normalize AI-generated misinformation, they erode public trust in all information sources, including corporate communications.

Verification is Essential: Any organization using AI tools must implement rigorous fact-checking processes to avoid similar disasters.

Competitive Advantage: Companies that can demonstrate responsible AI use will have significant advantages as public skepticism grows.

Regulatory Risk: The MAHA report scandal will likely accelerate regulatory responses to AI misuse, potentially affecting how businesses can use these tools.

The Kennedy MAHA report represents more than a policy failure—it's a preview of what happens when powerful AI tools are deployed without adequate oversight in critical decision-making contexts. If we don't implement proper safeguards now, incidents like this will become the norm rather than the exception, fundamentally undermining the evidence-based foundations that democratic governance requires.

The time for naive optimism about AI's impact on our information ecosystem has passed. The MAHA report proves that extreme caution isn't just advisable—it's essential for preserving the integrity of our democratic institutions.

Ready to implement AI governance frameworks that protect your organization's credibility while harnessing technology's benefits? Winsome Marketing's growth experts help companies navigate the complex landscape of responsible AI adoption and regulatory compliance.
