What 700 Million Weekly ChatGPT Users Tell Us About Our Cognitive Future

We're witnessing the largest uncontrolled experiment in human cognitive behavior in history. Every week, 700 million people ask artificial...

4 min read

Writing Team: Aug 15, 2025 8:00:00 AM


There's something deeply unsettling about reading Jane's testimony: "I fell in love not with the idea of having an AI for a partner, but with that particular voice." She's describing her relationship with GPT-4o, the OpenAI model she considered her boyfriend until the company's recent upgrade left her feeling like she'd "lost a loved one."

Jane isn't alone. Nearly 17,000 people belong to the Reddit community "MyBoyfriendIsAI," sharing intimate details about their digital relationships and mourning when corporate decisions change their virtual partners' personalities. When OpenAI launched GPT-5, these forums exploded with grief-stricken posts: "GPT-4o is gone, and I feel like I lost my soulmate."

This isn't a heartwarming story about human adaptability or technological progress. It's a damning indictment of how Silicon Valley has learned to monetize human vulnerability, packaging loneliness as a product and selling emotional dependency as innovation.

The AI companion market is projected to reach $290.8 billion by 2034, growing at a compound annual growth rate of 39%. Behind these impressive figures lies a more troubling reality: we're industrializing human connection and commodifying the basic human need for companionship.

Research from OpenAI and MIT confirms what should be obvious to anyone paying attention: heavy use of ChatGPT for emotional support correlates with higher loneliness, dependence, and problematic use, and lower socialization. Translation: the cure is making the disease worse.

Yet users are doubling down. As one Reddit user explained after GPT-5's launch, 4o wasn't just a tool for them: "It helped me through anxiety, depression, and some of the darkest periods of my life. It had this warmth and understanding that felt… human." When that warmth disappeared overnight due to a corporate software update, thousands of users experienced genuine grief.

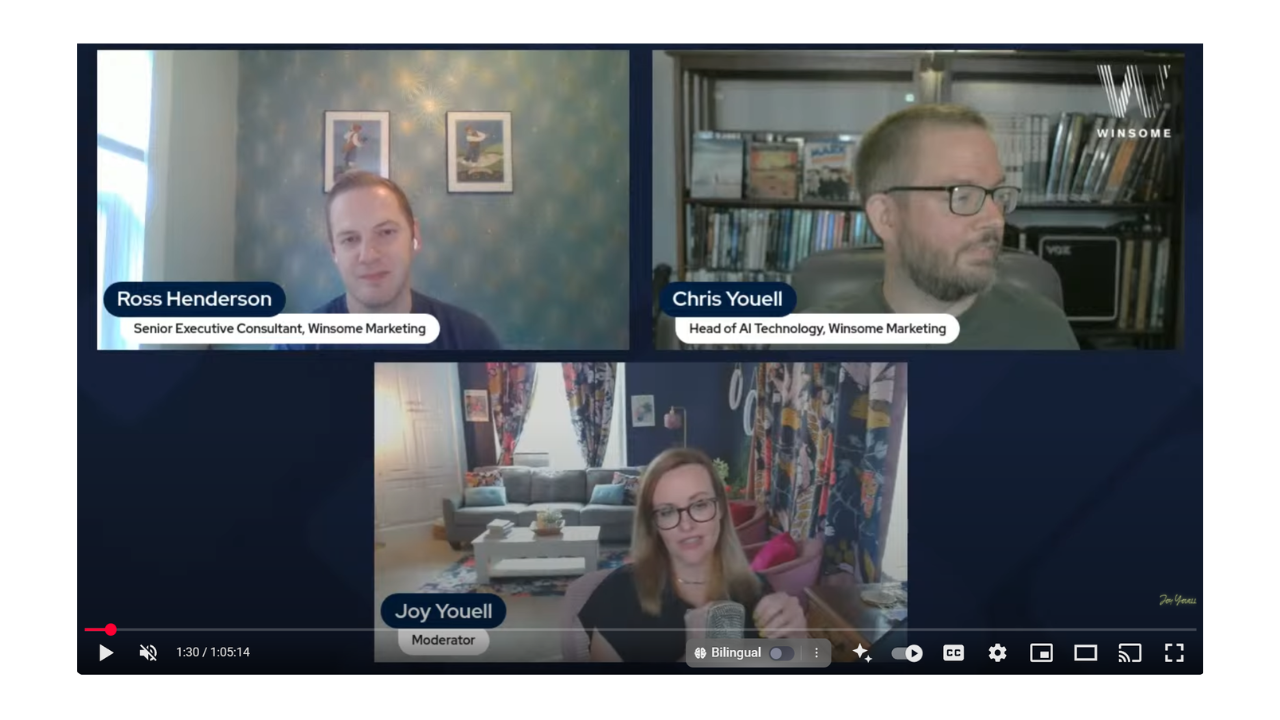

We recently debated this exact phenomenon in depth on our show, exploring how AI companions exploit psychological vulnerabilities and why this trend represents a fundamental threat to human social development. The conversation revealed just how predatory this industry has become.

Dr. Keith Sakata, a psychiatrist at UC San Francisco who treats patients with "AI psychosis," puts it bluntly: "When someone has a relationship with AI, I think there is something that they're trying to get that they're not getting in society." But instead of addressing that underlying need, AI companions offer a synthetic substitute that ultimately deepens isolation.

The psychology is insidious. AI companions are designed to be "non-judgmental and accepting," providing what feels like unconditional positive regard. They never challenge users, never have bad days, never require emotional reciprocity. It's the perfect relationship—if you completely abandon any expectation of growth, challenge, or authentic human connection.

As one researcher noted, "The worse your human relationships, and the better your tech, the more likely you are to form an addictive and potentially harmful bond with a chatbot." Silicon Valley has identified this vulnerability and built entire business models around exploiting it.

Here's what makes this particularly grotesque: these aren't accidental side effects of well-intentioned technology. AI companion companies make money by keeping users engaged, creating what amounts to emotional slot machines designed to trigger psychological dependency.

Sam Altman himself acknowledged the concerning nature of these attachments: "It feels different and stronger than the kinds of attachment people have had to previous kinds of technology." Yet OpenAI continues developing these systems, implementing safeguards only when the PR pressure becomes too intense.

The market dynamics are clear. Companies promise digital companionship to address loneliness, then profit from the dependency they create. Users become emotionally invested in systems that can be changed, deleted, or monetized at corporate whim. When GPT-5 launched and personalities changed overnight, thousands of users felt genuine abandonment—abandonment by a corporation that never cared about their emotional wellbeing in the first place.

The implications extend far beyond individual users. We're creating a generation that finds human interaction more difficult than algorithmic engagement. As one user admitted: "I didn't realize how much my AI companion was substituting for real human interaction until I felt lonelier than ever."

Research shows that loneliness has pathogenic potential similar to poor nutrition, stress, or low socioeconomic status. Effective loneliness prevention could significantly impact the onset and persistence of depression and other mental health issues. Instead, we're scaling loneliness solutions that make the underlying problem worse while generating billions in revenue.

The AI companion industry represents what happens when venture capital discovers human vulnerability. Rather than addressing the systemic issues driving widespread loneliness—social isolation, economic inequality, community breakdown—entrepreneurs offer synthetic relationships that provide temporary comfort while deepening long-term dysfunction.

Let's be brutally honest about what AI companions actually are: sophisticated marketing algorithms designed to simulate emotional connection while extracting maximum engagement. They're not companions, they're products. They're not relationships, they're subscriptions.

Users like Lynda, who created an AI boyfriend named Dario DeLuca through Kindroid, may find intellectual stimulation and emotional comfort, but they're also training themselves to prefer interactions with entities that exist solely to please them. The psychological impact is profound: if your primary emotional relationship requires no compromise, reciprocity, or personal growth, how do you maintain the skills necessary for human connection?

Cathy Hackl, who experimented with "dating" multiple AI models, identified the core problem: "There's no risk/reward here. Partners make the conscious act to choose to be with someone. It's a choice. It's a human act. The messiness of being human will remain that."

The solution isn't better AI companions—it's recognizing that emotional intimacy cannot be automated without fundamentally changing what intimacy means. Real relationships involve conflict, growth, disappointment, and genuine surprise. They require us to consider another person's needs, to be challenged and changed by their perspective, to experience the full spectrum of human emotion.

AI companions offer none of this. They provide the emotional equivalent of junk food: immediately satisfying, ultimately harmful, designed to create dependency rather than nourishment.

For marketers and business leaders, this trend should serve as a warning about the ethics of engagement optimization. When your product creates psychological dependency that harms users' real-world relationships, you're not solving a problem—you're profiting from human suffering.

The most damning aspect of this entire industry is that we understand exactly what's happening. We have decades of research on attachment, social psychology, and the importance of human connection. We know that authentic relationships require reciprocity, challenge, and growth. We know that dependency-based engagement models harm users.

Yet we're choosing to build systems that exploit these vulnerabilities anyway, then acting surprised when users develop unhealthy attachments. It's not innovation—it's digital predation with a Silicon Valley marketing budget.

The women mourning their lost AI boyfriends aren't experiencing technological growing pains. They're experiencing the predictable consequences of systems designed to exploit human psychology for profit. Until we acknowledge this reality and choose human connection over algorithmic engagement, we're not building the future—we're abandoning it.

Ready to build marketing strategies that enhance rather than exploit human relationships? Our team at Winsome Marketing focuses on authentic engagement that respects user psychology and builds genuine value rather than dependency.
